Real World Multi-Agent Reinforcement Learning – Latest Developments and Applications

March 31, 2025

By Sriram Ganapathi Subramanian

The decision by the Association for Computing Machinery to give the 2024 ACM A.M. Turing Award to Richard Sutton and Andrew Barto recognized the important role that reinforcement learning (RL), a field they helped establish, plays today. Some of the most popular large language models (LLMs), such as ChatGPT and DeepSeek, make extensive use of RL principles and algorithms. RL is also widely applied in robotics, autonomous vehicles, and healthcare.

An RL agent’s objective is to find near-optimal behaviours for sequential decision-making tasks using weak (real-valued) signals called rewards. This contrasts with supervised learning, where decisions are made in one shot with clear signals about the correct answer. This trial-and-error, experience-based learning makes RL closer to the learning processes observed in humans and other animals. Classic RL algorithms typically assume that there is a single learning agent in the system, with no other entities in the environment capable of agency. However, real-world settings usually have more than one agent. For example, in autonomous driving, agents have to constantly reason about other drivers on the road.

Multi-agent reinforcement learning (MARL), an emerging subfield, relaxes this assumption and considers learning in environments containing more than one autonomous agent. Although there have been multiple successes with MARL algorithms in the past decade, they have yet to find wide application in large-scale real-world problems for two important reasons. First, these algorithms have poor sample efficiency. Second, these algorithms do not scale to environments with many agents, since their time and space complexity typically grows exponentially with the number of agents in the environment.

My research broadly aims to address these limitations and accelerate the deployment of MARL in a variety of real-world settings, including fighting wildland fires, smart grid utility management, and autonomous driving. My long-term objective is wide real-world deployment of MARL, which I am pursuing through three short-term objectives: improving the sample efficiency of MARL algorithms, scaling them to environments with many agents, and demonstrating them in real-world domains as proofs of concept.

Sample efficiency concerns how effectively an algorithm learns from each data sample. Each data sample in RL/MARL constitutes an experience for the agent interacting with the environment. MARL algorithms usually need millions of data samples to learn at the level of human performance, while humans can learn such policies using only a few samples. For example, each AlphaStar agent experienced up to 200 years of real-time gameplay to learn good policies. Another example is the OpenAI Five algorithm, which played roughly 180 years' worth of games against itself every day during training, on computational infrastructure consisting of 256 GPUs and 128,000 CPU cores. In many real-world domains, this is a critical issue because the data available for agents to learn from is relatively scarce.

Prior research has recommended different approaches to improving the sample efficiency of MARL methods. One recommendation is to use additional rewards (called exploration rewards), reduced over time, to encourage learning certain behaviours. Other recommendations include adding an entropy loss for the policy to discourage convergence to a local optimum and using intrinsic motivation for exploration. While these approaches have their merits, recent studies show that exploration rewards and entropy loss may change the optimal policy of the original problem (unless handcrafted carefully for each environment), and intrinsic motivation may not be effective in several multi-agent scenarios. Further, all of these approaches train MARL algorithms from scratch in each environment, which may be inefficient.
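As a rough illustration of the first two ideas, the sketch below adds an exploration bonus whose weight shrinks linearly to zero over training, and computes the entropy of a discrete policy, which is the quantity an entropy term rewards. The function names, the linear schedule, and the `decay_steps` parameter are assumptions made for illustration and are not taken from any specific prior method.

```python
import math
from typing import Sequence


def shaped_reward(env_reward: float, bonus: float, step: int, decay_steps: int) -> float:
    """Environment reward plus an exploration bonus that decays linearly to zero.

    The linear schedule is an assumption; other schedules are equally plausible.
    """
    weight = max(0.0, 1.0 - step / decay_steps)
    return env_reward + weight * bonus


def policy_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)
```

While the bonus weight is non-zero, the agent optimizes a modified reward, which is why such bonuses can shift the optimal policy unless they are designed with care.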

My research takes a different approach. An important observation in this context is that many real-world environments already deploy possibly sub-optimal, hand-crafted (using physics-based rules), or heuristic approaches for generating policies. A useful possibility that arises is making the best use of such approaches as advisors to help improve MARL training. Our prior work explored learning from external sources of knowledge in MARL. However, these works have several stringent assumptions that prevent their application in practical environments. Our previous research provided a principled framework for incorporating action recommendations from a general class of online, possibly sub-optimal advisors in non-restrictive multi-agent general-sum settings, with either a single advisor or multiple advisors. Our prior research also provided new RL algorithms for continuous control that can leverage multiple advisors. Separately, we conducted detailed theoretical studies of this approach, providing guarantees of performance and stability. Some examples of advisors include driving models in autonomous driving, physics-based spread models in wildland fires, and mathematical marketing models for product marketing. Advisors could also be humans with prior knowledge in these domains. While my prior work was restricted to obtaining action recommendations from the advisors, my ongoing work is studying the effects of a large class of multi-agent transfer methods (through advisors), such as transfer through value functions, reward shaping, and policies.
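One simple way to picture action advising is sketched below: with some probability the learner follows the advisor's recommended action, and otherwise it falls back to epsilon-greedy selection over its own value estimates. This is only an illustrative sketch and not the framework described above; `select_action`, `advisor_prob`, and the other names are hypothetical.

```python
import random
from typing import Sequence


def select_action(
    q_values: Sequence[float],
    advisor_action: int,
    advisor_prob: float,
    epsilon: float,
    rng: random.Random,
) -> int:
    """Pick an action, sometimes deferring to a (possibly sub-optimal) advisor."""
    # Follow the advisor's recommendation with probability advisor_prob.
    if rng.random() < advisor_prob:
        return advisor_action
    # Otherwise explore uniformly at random with probability epsilon.
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    # Otherwise act greedily on the learner's own value estimates.
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

Decaying `advisor_prob` toward zero over training would let the learner lean on the advisor early on and on its own estimates later, which matters when the advisor is sub-optimal.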

Traditionally, the time and space complexity of MARL algorithms has grown exponentially with the number of agents. Hence, these algorithms are intractable in environments with many agents. In the literature, this problem has been referred to as the curse of dimensionality.
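To make the growth concrete, here is a minimal sketch that counts joint actions, assuming every agent chooses from the same discrete action set. The function name and the choice of five actions per agent are hypothetical.

```python
def joint_action_count(num_actions: int, num_agents: int) -> int:
    """Number of joint actions when each agent picks one of num_actions actions."""
    return num_actions ** num_agents


if __name__ == "__main__":
    # A learner that reasons over joint actions needs one value per joint action
    # in every state, so this count bounds its table size from below.
    for n in (1, 2, 5, 10, 20):
        print(f"{n:>2} agents -> {joint_action_count(5, n):,} joint actions")
```

With five actions per agent, two agents give 25 joint actions, while ten agents already give 9,765,625.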

Three types of solutions have been proposed for scaling MARL algorithms: independent learning (IL), parameter sharing (PS), and mean-field methods. Each has its advantages and disadvantages. IL methods treat all other agents as part of the environment and do not model them, which makes these methods scalable. While simple and effective in some situations, IL methods are not theoretically sound, so it is not entirely clear which multi-agent environments or situations suit them best. PS methods train a single network whose parameters are shared across all the agents. These methods are only applicable in cooperative environments with a set of homogeneous agents. Mean-field methods use mean-field theory to abstract the other agents in the environment into a single virtual mean agent. While effective, these methods rely on several stringent assumptions, such as fully homogeneous agents, full observability of the environment, and centralized learning settings, that prevent their wide application in practical environments.
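In mean-field Q-learning, a common instance of the mean-field idea, the virtual mean agent is represented by the average of the neighbours' one-hot encoded actions. The sketch below computes that average for discrete actions; `mean_action` and its arguments are hypothetical names, and returning all zeros for an agent without neighbours is an assumption made here for simplicity.

```python
from typing import List, Sequence


def mean_action(neighbour_actions: Sequence[int], num_actions: int) -> List[float]:
    """Average of the neighbours' one-hot encoded discrete actions."""
    counts = [0] * num_actions
    for action in neighbour_actions:
        counts[action] += 1
    total = len(neighbour_actions)
    if total == 0:
        # Assumption: an agent with no neighbours sees an all-zero mean action.
        return [0.0] * num_actions
    return [count / total for count in counts]
```

The result always has length `num_actions`, so a value function conditioned on an agent's own action and this mean action has a fixed input size however many neighbours there are.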

My previous work has contributed to improving these methods by addressing existing limitations and making these methods more widely applicable. For IL, our prior research provided a detailed experimental analysis elucidating the strengths and weaknesses of IL methods in cooperative, competitive, and mixed-motive multi-agent environments. We showed that IL methods are as effective as multi-agent learning methods in several cooperative and competitive environments, but suffer in mixed-motive environments. Regarding PS methods, our prior work recommended novel communication protocols for agents to share information while using distinct and independent networks for training. For mean-field methods, our prior work relaxed each of the restrictive assumptions. First, we relaxed the assumption of requiring fully homogeneous agents by using type classification. Next, we provided novel mean-field algorithms that can operate efficiently in partially observable environments. Further, we provided novel mean-field algorithms that can learn good policies using fully decentralized learning protocols. However, mean-field methods continue to have some limitations, such as assuming that every agent impacts other agents in the same way and showing unstable learning behaviours, which I am addressing in my ongoing research.

My research studies the effectiveness of RL and MARL solutions in a set of real-world domains as a proof-of-concept: fighting wildland fires, material discovery, and autonomous driving.

In the domain of wildland fires, our previous research applied RL to improving existing spatial fire simulation models and preparing better fire-fighting strategies for two large wildfire events in Alberta: the Fort McMurray Fire and the Richardson Fire. Further, other researchers released a detailed Fire Commander wildfire simulator that simulates the effect of wildfire intervention strategies and reports burn metrics. While this simulator was used to test the performance of MARL algorithms in hand-crafted toy wildfire environments, there were no attempts to study MARL performance in wildfire control scenarios pertaining to a real-world fire event. My ongoing work undertakes such a large-scale study. Further, we have published a detailed survey on the application of machine learning algorithms in the domain of fighting wildland fires, along with a detailed list of future possibilities highlighting untapped potential in this line of work. This survey article has received considerable attention, accumulating hundreds of citations in a short period.

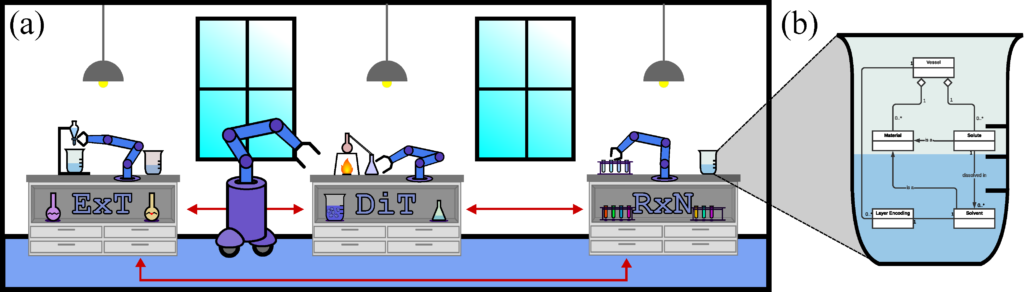

In material discovery, prior work has used RL techniques for searching the large combinatorial chemical space, but reported difficulties in obtaining enough data samples for RL training. To address this difficulty, we have recently open-sourced a Chemistry Gym simulator that simulates the effect of different chemical reactions and is an effective tool for training RL/MARL algorithms to handle chemical reagents. Also, we provided a fundamentally different form of RL, i.e., a maximum reward formulation, which is more relevant for material discovery compared to the standard formulation that uses the expected cumulative rewards.
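The difference between the two objectives can be seen on a single trajectory of rewards. The sketch below compares the standard discounted return with the largest reward seen along the trajectory; it only illustrates the contrast, the function names are hypothetical, and the exact published maximum reward formulation (for example, how it handles discounting) may differ.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Standard objective: discounted sum of rewards along a trajectory."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


def max_reward(rewards: Sequence[float]) -> float:
    """Maximum-reward view: the best single reward reached along a trajectory."""
    return max(rewards)


# Two hypothetical search trajectories, with reward read as candidate quality.
steady = [2.0, 2.0, 2.0, 2.0]      # many moderately good candidates
breakthrough = [0.0, 0.0, 0.0, 5.0]  # one excellent candidate at the end
```

Under the cumulative objective (with `gamma = 1.0`), `steady` scores higher, while under the maximum-reward view `breakthrough` does. One reading of why this suits discovery tasks is that the single best candidate found can matter more than the total reward collected along the way.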

For autonomous driving, there are reported benefits to using single-agent RL techniques. Though driving is a multi-agent environment with many different vehicles on the road at the same time, prior work was largely restricted to using independent techniques. Our prior work has open-sourced multiple MARL driving testbeds and provided new MARL algorithms for autonomous driving. These algorithms vastly outperformed independent techniques. Further, we performed a large study on the potential use of RL for learning constraints on German highway environments and provided novel RL algorithms for improving autonomous decisions in difficult road conditions such as high-traffic zones, low-visibility zones, and routes with rough weather conditions. My ongoing work is further improving MARL capabilities in both autonomous driving and material discovery, with the aim of achieving wide MARL deployment in both these domains.

MARL algorithms have the potential to train autonomous agents and deploy them in shared environments with different types of other agents. These agents can achieve their objectives by collaborating and/or competing with other agents (including those that they may have never seen previously during training). After extensive training on complex environments having diverse opponents, scenarios, and objectives, these agents will be capable of learning to autonomously navigate dynamically changing environments, efficiently generalize to new situations, and plan ahead on the basis of uncertainty in the outcome of their actions/strategies. Notably, MARL algorithms have also demonstrated success in partially observable environments where they can effectively exploit limited information captured by the agent’s sensors.

Deploying MARL in the real world has several benefits in a wide range of application areas. For safety-critical applications like fighting wildland fires, reliably deploying multiple robots capable of fighting the fire autonomously could reduce the number of human firefighters on the ground, saving lives. In areas like health care, where there is a shortage of human labour, autonomous agents can help improve efficiency. For example, we could see increased numbers of robot-assisted surgeries, care robots for the elderly, and robotic nursing assistants. Many other application areas, such as finance, sustainability, and autonomous driving, can also benefit from MARL algorithms, with autonomous agents helping to automate operations, improve efficiency, and reduce costs.

From a technical standpoint, two major limitations, poor sample efficiency and poor scalability, are preventing a wide deployment of MARL algorithms in real-world problems. Due to these limitations, MARL has traditionally focused on simple toy problems involving two (or at most tens of) agents with a restrictive set of applications. My research recommends novel solutions to these limitations and directly explores the effectiveness of these solutions in certain real-world applications to serve as proofs of concept. It is closing the gap between academic successes in MARL and real-world deployment.