AI trust and safety

At Vector Institute, we believe that realizing the transformative potential of artificial intelligence requires a steadfast commitment to trust and safety.

Our north star? Set a global standard for AI – so that wherever AI is used, it can be trusted, safe and aligned with human values.

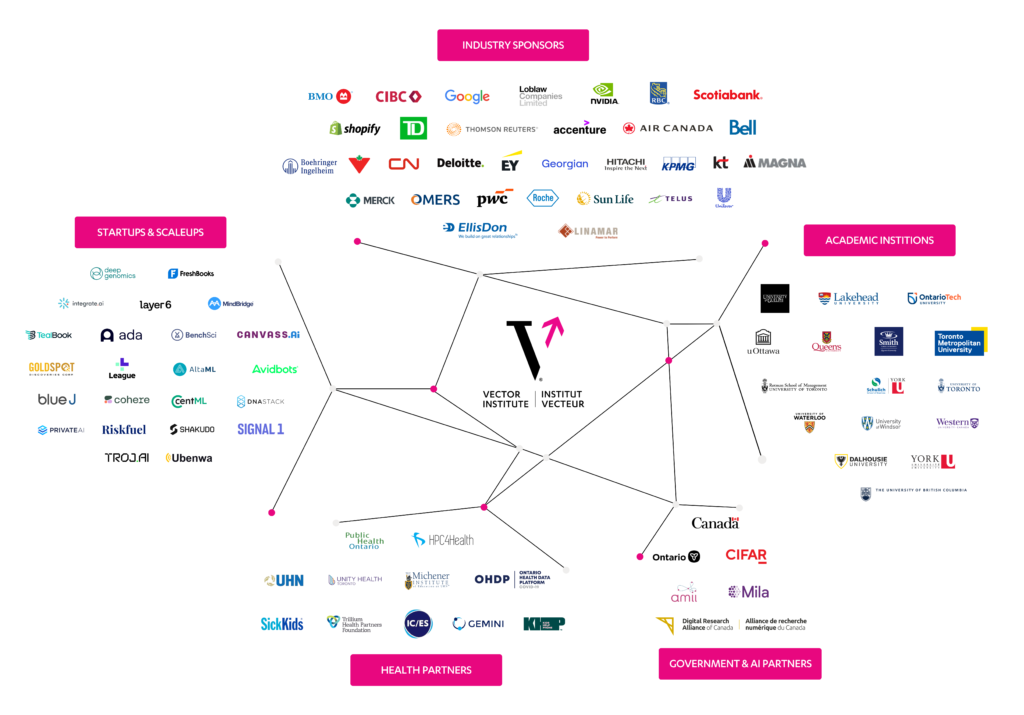

Setting a global standard for safe and trusted AI by harnessing Ontario’s world-class AI ecosystem

Vector is leading the charge in responsible AI adoption. Serving as a counterbalance, it works to democratize AI for the benefit of society, focusing on AI safety research and on tools that enable organizations to deploy AI ethically. Through partnerships with world-renowned research hospitals and academic institutions in Toronto, the birthplace of modern machine learning and Canada’s economic centre, Vector brings together the brightest minds to advance fundamental and applied research in AI safety.

Through this collective, Vector released its AI Trust & Safety Principles and continues to advance cutting-edge research, applied tools and frameworks that help organizations put those principles into practice.

AI Trust & Safety Principles

At Vector Institute, trust and safety are at the core of everything we do. Our AI Trust & Safety Principles, developed in collaboration with partners across industry, government and academia, provide clear guideposts for the responsible development and deployment of AI:

- AI should benefit humans and the planet

- AI systems should reflect democratic values

- AI must respect privacy and security

- AI should be robust, secure and safe

- AI oversight requires responsible disclosure

- Organizations developing AI must be accountable

Here’s what Vector experts have to say

“A ‘huge effort’ should be made to protect the technology from bad actors, and toward training artificial intelligence to be more benevolent.”

“We are in a truly transformative moment. It’s a really exciting time… but also a time for some care and some risk… we’re in a little bit of the wild west.”

“We are on a path towards a world in which machines can do literally everything better than us. Before we get there, we need to make sure that our civilization has strong and stable incentives to keep us happy and healthy, even when we’re a net drag on growth.”

“With AI progressing as rapidly as it is, we need to foresee and prevent catastrophic risks that arise from future AI systems. We can’t afford to wait until things go wrong.”

Safe AI leadership: The Canadian way

Vector transforms AI Trust & Safety Principles into real-world practice through:

- A leading voice in shaping the global conversation around AI governance and ethics

- Research on AI alignment, robustness, privacy and security across multiple sectors

- Applied research and engineering of tools and frameworks that enable responsible AI adoption, including evaluation, modeling, benchmarking and more

- Sector-specific frameworks, guidance and collaborative projects in health, financial services, government and more

We work with global leaders such as SRI, the OECD, the WEF and governments to define standards and best practices for trustworthy AI.
