Researchers and professionals from coast to coast descend on Vector for first Research Symposium and Job Fair

March 25, 2019

On Friday, February 22, 2019, the Vector Institute held its first-ever Research Symposium and Job Fair – one of the largest gatherings of machine learning talent across Canada. The one-day event showcased top research produced by Vector researchers over the last year. The event was an opportunity for local master’s students, PhD students and post-docs in the fields of machine learning and AI more broadly to connect with Vector’s industry and health partners and to discover a wide range of internship and career opportunities. The event, tailored for Vector’s industry sponsors and research community, had representatives from 20 Vector industry sponsors and health partners and over 300 attendees.

The symposium featured a presentation by Vector Faculty Member David Duvenaud on Neural Ordinary Differential Equations, work that was recognized with a Best Paper Award at NeurIPS 2018, one of the world’s largest flagship machine learning conferences. Hassan Ashtiani, previously a Postgraduate Affiliate at Vector, gave a presentation on Settling the sample complexity of GMMs via Compression Schemes.

Vector Research Symposium & Job Fair by the numbers

For job-seekers and researchers with expertise in machine learning and AI from across Canada, Vector’s Research Symposium & Job Fair was the place to be to expand their networks and meet potential collaborators or employers. Attendees included students and faculty from institutions across Canada and abroad, including:

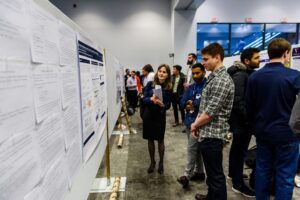

In addition to the presentations, 56 research posters were on display, featuring work published by Vector researchers and the surrounding AI community in 2018. Many posters featured research that had been accepted by world-renowned conferences and journals and included topics ranging from classifying cancer types and animal identification to high-accuracy trajectory tracking. For industry attendees, the poster sessions were an exclusive opportunity to gain exposure to a large concentration of Vector’s local machine learning faculty, graduate students and affiliates working on the latest machine learning advancements.

Throughout the day, students were also able to network with Canada-based enterprises and startups seeking to hire machine learning and AI talent locally, an exclusive opportunity for Vector’s industry sponsors. Job openings ranged from opportunities in data science, analytics, and engineering to project management.

Several of the Vector Institute’s industry sponsors and health partners who are at the forefront of AI adoption in Canada were present at the Job Fair, including:

To wrap up the day, there was a lively panel discussion with Craig Boutilier (NeurIPS 2018 Best Paper winner, Google), Sheila McIlraith (University of Toronto and Vector Faculty Affiliate), Brendan Frey (Vector Co-founder, Deep Genomics), and Jamie Kiros (Google Brain), moderated by Vector’s own Research Director, Richard Zemel.

The panel explored big challenges facing machine learning and where the next breakthroughs will come from, diving into topics such as hybrid approaches to deep learning research, interpretability, and ethical AI.

Panel Highlights

The big challenges facing machine learning

The main theme of the panel was how ethics is currently lagging behind recent technological advancements. Panelists attributed this in part to machine learning research being siloed from the other fields of study where AI is applied, which presents multiple hurdles when conducting research. According to the panelists, another challenge facing machine learning is that interactions in models are not very intelligent, and understanding how to have natural interactions remains a big challenge in research.

Hybrid approaches to deep learning research (i.e., probabilistic models and logical AI)

Panelists explained that many machine learning techniques take advantage of hybrid approaches that include neural nets as a component. The panel also discussed how, in general, the research community does not talk enough about topics such as algorithms supporting real-world decision-making and how machine translation revolutionized the field.

Interpretability

With respect to interpretability, the panel discussed how trust and control are the reasons behind the need for interpretability and how humans usually rationalize decisions post hoc. This led to the question of whether a researcher is able to know exactly what is happening in a model. In addition, one panelist explained that when creating a model, the creator should be able to explain decisions made on behalf of the user. Towards the end of the discussion, it was noted that researchers should have the end user in mind when thinking of what interpretability means because it can change in different contexts.

Ethical AI

The panelists talked about how it is important for researchers to have a solid belief architecture to orient them when designing algorithms.

It was also noted that ethical considerations are not unique to AI. Rather, they are an important issue in other fields, including computer science more broadly. To that end, the panelists expressed that researchers have a responsibility to educate students and build towards a better future for humanity. It was also noted that when faced with hard decisions on what should be researched, and questions about possible negative applications of a model, there is uncertainty about where a line should be drawn to account for a curiosity-danger tradeoff.

Ending on a positive note, the panel expressed that we now have better tools than ever before to take a shot at tackling the big challenges that were discussed. In response to an audience question, it was mentioned that although researchers should participate in an advisory role in public policy discussions, it should not be exclusively the scientific community that gates usage of models in decision-making.