ICML 2023: Developing an adaptive computation model for multidimensional generative tasks

October 25, 2023

By Natasha Ali

A new paper co-authored by Vector Institute Faculty Member David Fleet introduces an adaptive computation model capable of representing multidimensional visual data and generating realistic images and videos.

“Scalable Adaptive Computation for Iterative Generation” was among the papers accepted at ICML 2023 (the International Conference on Machine Learning), which showcased outstanding research by distinguished members of Vector’s research community. Held from July 23 to July 29, the conference featured 21 papers co-authored by Vector Institute Faculty Members, Faculty Affiliates, and Postdoctoral Fellows.

Adaptive computation allows neural networks to adjust how they process information as the input changes, devoting more effort where it is needed and less where it is not. At its core, it lets deep learning models adapt their computation to new conditions rather than applying a fixed amount of processing everywhere, giving them a flexibility loosely reminiscent of the human brain.

Many previous generative models allocate computation uniformly across fixed data units such as image pixels or image patches. In realistic images and videos, however, information tends to be unevenly distributed across the visual space, with complex data clustered in areas that contain visually elaborate objects or textures.

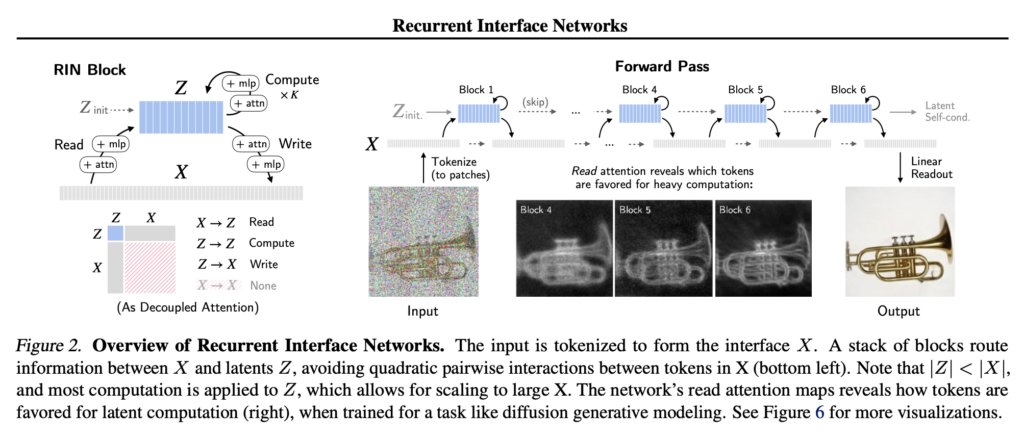

This is where Recurrent Interface Networks (RINs) come in. This newly developed adaptive model alleviates some of the issues associated with complex generative tasks. Using a dual-unit system, RINs assign data to interface (X) and latent (Z) spaces and pass information back and forth between the two, enabling neural networks to process unevenly distributed data more efficiently.

Connected directly to the input data, the interface space adapts to changes in input size and variations in data clustering, expanding to thousands of data points as necessary while leaving the overall computation cost relatively unchanged. It mainly handles static visual regions that require minimal computation. The latent space, by contrast, operates independently of the scale of the input data. As the computational powerhouse, it is responsible for specialized, high-capacity computing and labeling tasks in clustered regions.
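To make the scaling argument concrete, here is a rough back-of-the-envelope sketch in Python. It counts pairwise token interactions, assuming attention-based read and write steps between the two spaces and self-attention among the latents. The function names, the number of latents (256), and the number of latent layers (4) are illustrative assumptions, not figures from the paper.

```python
def full_attention_pairs(n_tokens: int, n_layers: int) -> int:
    """Pairwise interactions when every token attends to every other token."""
    return n_layers * n_tokens * n_tokens


def rin_block_pairs(n_interface: int, n_latents: int, n_latent_layers: int) -> int:
    """Pairwise interactions in one read / compute / write block (illustrative)."""
    read = n_latents * n_interface                      # latents attend to interface tokens
    compute = n_latent_layers * n_latents * n_latents   # self-attention among latents only
    write = n_interface * n_latents                     # interface tokens attend to latents
    return read + compute + write


if __name__ == "__main__":
    # Hypothetical sizes: interface grows with the input, latents stay at 256.
    for n in (1024, 4096, 16384):
        print(n, full_attention_pairs(n, 1), rin_block_pairs(n, 256, 4))
```

Under these assumptions, the cost of a block grows linearly with the number of interface tokens, while a single layer of full self-attention grows quadratically, which is one way to read the claim that the interface can expand without a matching jump in computation.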

The process of training RINs to identify visual components consisted of interface initialization, followed by latent initialization and block stacking. As the first point of contact with the input, interface units converted images or videos into a set of patch tokens: a series of identifier vectors that carry information about an image component, such as size, relative location, and texture. In contrast to interface initialization, latent initialization involved specialized vectors with advanced predictive capabilities and learned behaviour.
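As a minimal sketch of what turning an image into patch tokens can look like, the Python snippet below splits a small grayscale image into non-overlapping patches. The `patchify` helper and the token layout (a dictionary with `position` and `pixels`) are assumptions made for illustration; the paper's tokenizer uses learned embeddings rather than raw pixel lists.

```python
from typing import Dict, List

Image = List[List[float]]  # grayscale image as rows of pixel values


def patchify(image: Image, patch: int) -> List[Dict]:
    """Split an image into non-overlapping patch tokens.

    Each token keeps the flattened pixel values plus the (row, col) index
    of its patch, standing in for the "relative location" of the component.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            pixels = [image[r][c]
                      for r in range(top, top + patch)
                      for c in range(left, left + patch)]
            tokens.append({"position": (top // patch, left // patch),
                           "pixels": pixels})
    return tokens


if __name__ == "__main__":
    # A 4x4 toy image whose pixel value encodes its index.
    toy = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    for token in patchify(toy, 2):
        print(token)
```

The number of tokens grows with the image size, which is why the interface, rather than the latent space, is the part of the model that scales with the input.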

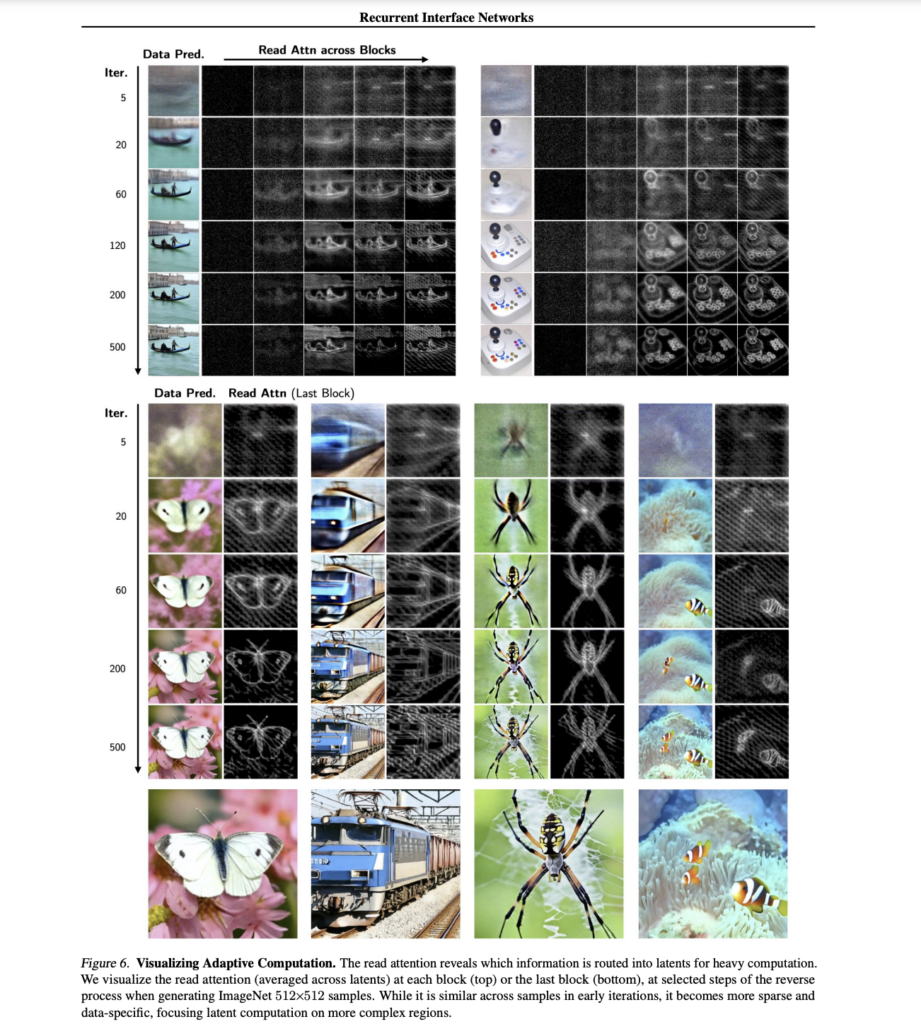

Following preliminary data processing, the interface and latent spaces repeatedly exchanged information, with latents accumulating essential computational information from the interface. Read attention maps were used to assign a quantitative score to each data point and locate important regions, prioritizing certain units as “compute heavy” and signaling their transfer to the latent space. This recurring process formed stacks of RIN blocks, whose output was then analyzed and reshaped to create the desired visual output.
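The exchange described above can be sketched as a single simplified block in plain Python. This is a toy under stated assumptions: it uses dot-product attention with no learned projections, omits the latent self-attention and feed-forward layers a real block would contain, and the names `attend` and `rin_block` are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries: List[Vector], sources: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """Each query takes a weighted average of the sources (scaled dot-product attention)."""
    dim = len(sources[0])
    weights = [softmax([sum(q[d] * s[d] for d in range(dim)) / math.sqrt(dim)
                        for s in sources])
               for q in queries]
    mixed = [[sum(w * s[d] for w, s in zip(row, sources)) for d in range(dim)]
             for row in weights]
    return mixed, weights


def rin_block(interface: List[Vector], latents: List[Vector]):
    """One read / write exchange between interface and latents (simplified)."""
    # Read: latents query the interface; read_map scores every interface token.
    read, read_map = attend(latents, interface)
    latents = [[z + r for z, r in zip(zv, rv)] for zv, rv in zip(latents, read)]
    # Write: interface tokens query the updated latents.
    written, _ = attend(interface, latents)
    interface = [[x + w for x, w in zip(xv, wv)] for xv, wv in zip(interface, written)]
    return interface, latents, read_map


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three interface tokens
    z = [[1.0, 0.0], [0.0, 2.0]]               # two latents
    x, z, read_map = rin_block(x, z)
    print(read_map)  # one row of attention weights per latent
```

Each row of `read_map` sums to one and indicates how strongly a given latent draws on each interface token, a small-scale analogue of the read attention maps mentioned above. Stacking several such blocks would repeat the exchange.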

To address issues with data inconsistency and possible detachment between the two spaces, Fleet and his co-authors proposed an iterative generation mechanism, in which previously computed data points were carried over – in iterations – across different latent units and used to build on existing information.

The idea was to “warm-start” latent units such that earlier computations served as initial blueprints for subsequent computation and data processing tasks. By exposing the latents to prior results, new latent units could rapidly acclimate to the predictive properties of the current model, resulting in effective information routing between the spaces and within the neural network as a whole.
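A toy Python sketch of the warm-start idea follows. It assumes the previous iteration's latents are simply added to a fixed initialization; the actual mechanism in the paper may transform them first. `toy_forward` is a hypothetical stand-in for a full network pass, and all names and numbers here are illustrative.

```python
from typing import List, Optional

Vector = List[float]


def init_latents(learned_init: List[Vector],
                 previous: Optional[List[Vector]]) -> List[Vector]:
    """Cold start from the fixed initialization, or warm-start by adding
    the latents computed at the previous iteration (an assumed rule)."""
    if previous is None:
        return [list(z) for z in learned_init]
    return [[a + b for a, b in zip(z0, zp)]
            for z0, zp in zip(learned_init, previous)]


def toy_forward(latents: List[Vector], step: int) -> List[Vector]:
    """Hypothetical stand-in for a network pass: damp the latents and
    add a step-dependent signal."""
    return [[0.5 * v + step for v in z] for z in latents]


def generate(learned_init: List[Vector], n_steps: int,
             warm_start: bool = True) -> List[List[Vector]]:
    """Run several generation iterations, optionally carrying latents over."""
    previous = None
    history = []
    for step in range(n_steps):
        latents = init_latents(learned_init, previous if warm_start else None)
        latents = toy_forward(latents, step)
        history.append(latents)
        previous = latents
    return history


if __name__ == "__main__":
    init = [[1.0, 2.0]]
    print(generate(init, 2, warm_start=True))
    print(generate(init, 2, warm_start=False))
```

With `warm_start=True`, the second iteration begins from the first iteration's result instead of starting over, so information computed earlier is reused rather than rebuilt at every step.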

As a novel computation model, Recurrent Interface Networks (RINs) can successfully tag and recreate multidimensional visual information. Using its dual-unit system, this adaptive computation model can isolate data points based on their estimated compute requirements and expedite the data labeling and analysis process, leading to the swift generation of elaborate images and videos.

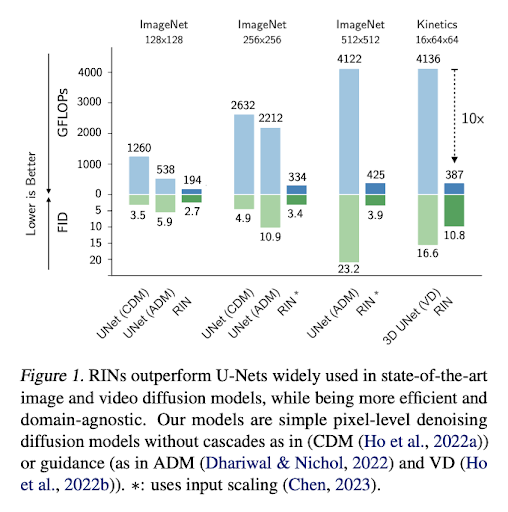

After comparing RINs to well-established adaptive generation models, Fleet and his co-authors demonstrated the superior performance of RINs in adaptively identifying and tagging spatial fluctuations in images and videos. The groundbreaking adaptive model outperformed numerous generative models as a robust and dynamic computational tool and provided promising results for generative artificial intelligence in visually complex tasks.

This notable paper is a stepping stone to building more powerful generative AI models and facilitating the transition of generative technology into the workplace. With the growing interest in generative AI, the Vector Institute continues to expand its research initiatives that aim to boost existing techniques and further Toronto’s reputation as a hub for pioneering AI research.

Sources:

Graves, Alex. (2017). Adaptive Computation Time for Recurrent Neural Networks. arXiv. https://arxiv.org/abs/1603.08983